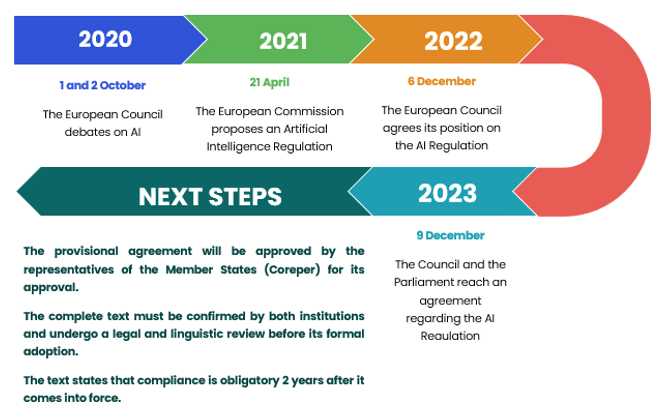

The proposal for the European Regulation on Artificial Intelligence places particular emphasis on governance, which is regulated in its Title VI. This title establishes a series of control bodies and, among other things, obliges each Member State to designate Artificial Intelligence Supervisory Authorities.

For this reason, the following laws have been cited as the background for the creation of Spain's supervisory agency:

The one hundred and thirtieth additional provision of Law 22/2021, of 28 December, on General State Budgets, established the creation of the Spanish Agency for the Supervision of Artificial Intelligence (AESIA). The Agency is defined as having full organic and functional independence, and it must carry out measures to minimise significant risks to the safety and health of people, as well as to their fundamental rights, that may arise from the use of artificial intelligence systems.

Law 28/2022, of 21 December, to promote the ecosystem of emerging companies, known as the Startup Law, also provides for the creation of the AESIA in its seventh additional provision.

Lastly, Royal Decree 729/2023, of 22 August, on the Statute of the Spanish Agency for the Supervision of Artificial Intelligence, provides for its effective implementation through the constitution of the Governing Council within a maximum period counted from the entry into force of the royal decree.

Then, on 7 December 2023, the Secretary of State for Digitalisation and Artificial Intelligence published the names of the members of the Governing Council, thus establishing this ground-breaking Agency. With its creation, Spain becomes the first European country to have an authority with these characteristics, ahead of the entry into force of the European Regulation on AI.

This Agency, based in Galicia, will not be the only national authority, since the proposal for the European Regulation on Artificial Intelligence states that Member States will have to designate competent national authorities and select a national supervisory authority from among them.

Functions of AESIA

- Oversee compliance with the applicable regulations in the field of Artificial Intelligence, with the power to impose penalties for violations.

- Promote testing environments that enable AI systems to be correctly adapted in order to reinforce user protection and avoid discriminatory biases. To this end, Royal Decree 817/2023, establishing a controlled testing environment for assessing compliance with the proposal for the European Regulation on Artificial Intelligence, was published on 8 November 2023.

- Strengthen trust in technology through the creation of a voluntary certification framework for private entities, which makes it possible to offer guarantees on the responsible design of digital solutions and to ensure technical standards.

- Create knowledge, training and dissemination related to ethical and humanistic artificial intelligence, to show both its potential and opportunities for socioeconomic development, innovation and the transformation of the productive model, and the challenges, risks and uncertainties posed by its development and adoption.

- Stimulate the market to boost innovative and transformative practical initiatives in the scope of AI.

- Help to implement programs in the field of Artificial Intelligence through agreements, contracts or any other legally binding instrument.

Comparison with the Spanish Data Protection Agency (AEPD)

In general, these two authorities have similar functions, although each in its own field. In France, by contrast, the data protection authority, CNIL, will be the one to hold the powers of the Supervisory Authority within its borders.

Even so, AESIA and AEPD cannot be fully compared, since the presidency of AESIA's Governing Council is the responsibility of the Secretary of State for Digitalisation and Artificial Intelligence, who will directly propose the general director of AESIA, whereas under the Statute of the AEPD its positions are appointed by parliamentary agreement.